Why we love Crons

Cron jobs are one of the basic building blocks of modern software. They are often among the first things we run outside of our main application, and an easy go-to when we need to run a script or quickly put something into production, for example:

- running a nightly script

- moving data from one place to another on a regular schedule

We also frequently use crons as part of larger processes, such as regularly checking an API or database to see whether information has changed so that we can act on it.

Crons are mostly reliable and predictable, easy to implement, and they run on our existing infrastructure.

But depending on what we use them for, crons have some drawbacks:

- It's hard to get a clear overview of all the cron jobs we are running

- It can be annoying to troubleshoot when an error or timeout happens

- Out-of-the-box alerting is limited, so we need to build our own if we want something useful.

- It can be hard to scale them or to chain related tasks together

- We can run into a lot of problems when we have multi-step processes with related tasks and multiple external services.

So we will walk you through the following scenarios and show how to replace crons with Zenaton tasks (and even a workflow) in each of them:

- recurring tasks

- asynchronous jobs - offloading lower priority tasks in the background

- multi-step processes, where crons are used to check a database or API to see if something has changed

Getting Started with Zenaton

To run a task or workflow, you need a Zenaton account, and the Zenaton agent and SDK must be installed in your project, which can be hosted locally or on your preferred hosting solution.

Scenario 1: Recurring single tasks

The most common cron usage is to run a nightly (or periodic) script to do something like:

- move data between internal services

- pull in data from a third-party reporting API to create reports

- send a batch of emails or communications

Normally, you would add an entry to the crontab with the schedule and the path to a script, and it would run on your infrastructure.

Run a recurring task with Zenaton:

We will create a Zenaton task and schedule it with the schedule function, which accepts cron expressions.

```javascript
const { task } = require("zenaton");

module.exports = task("SalesReport", {
  async handle(...input) {
    // your existing script
  },
});
```

In a second script, we create the schedule for the task using the schedule function and a cron expression that runs daily at 4 am.

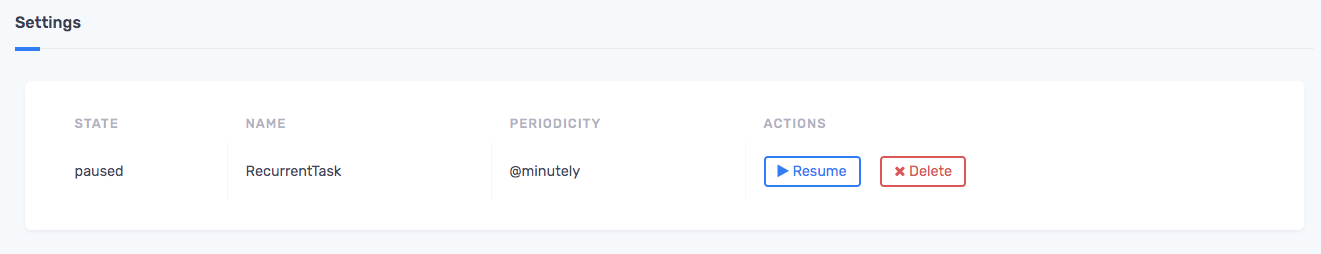

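```javascript
// client is an initialized Zenaton client.
// Standard five-field cron expression: minute 0, hour 4, every day.
client.schedule("0 4 * * *").task("SalesReport");
```

Now we can open the Schedules tab on our dashboard to see all the schedules we have created and, if needed, pause, resume, or delete them.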
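A standard cron expression has five fields (minute, hour, day of month, month, day of week), so `0 4 * * *` reads as "minute 0 of hour 4, every day". As a rough illustration of how such an expression is read, here is a minimal plain-JavaScript matcher. It is not part of the Zenaton SDK, `cronMatches` is a hypothetical name, and it only understands `*` and single numbers (no ranges, lists, steps, or cron's special rule for combining day of month with day of week).

```javascript
// Minimal sketch: does a five-field cron expression
// (minute hour day-of-month month day-of-week) match a given Date?
// Only "*" and single numbers are supported.
function cronMatches(expression, date) {
  const fields = expression.trim().split(/\s+/);
  if (fields.length !== 5) {
    throw new Error(`Expected 5 fields, got ${fields.length}`);
  }
  // Values of the date in the same order as the cron fields.
  // getMonth() is 0-based while cron months are 1-12; getDay() uses 0 = Sunday.
  const values = [
    date.getMinutes(),
    date.getHours(),
    date.getDate(),
    date.getMonth() + 1,
    date.getDay(),
  ];
  // Every field must be a wildcard or equal to the corresponding value.
  return fields.every((field, i) => field === "*" || Number(field) === values[i]);
}

// "0 4 * * *" fires at 04:00 every day (dates are in local time).
console.log(cronMatches("0 4 * * *", new Date(2024, 0, 15, 4, 0))); // true
console.log(cronMatches("0 4 * * *", new Date(2024, 0, 15, 5, 0))); // false
```

A real scheduler also has to compute the next matching time rather than test one instant, which is part of what you delegate when you hand the expression to a scheduling service.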

Adding automatic retries:

If this script calls an intermittent or unreliable API, we might want the task to retry automatically when an error occurs. That way it can still complete instead of waiting for the next day's run.

Automatic retries are also useful when we risk hitting a rate limit: once we have maxed out, we want to rerun the script a little later so that it can complete.

const { task } = require("zenaton");

module.exports = task("SalesReport", {

async handle(...input) {

// your existing script

}

onErrorRetryDelay(e) {

// Retry at most 3 times, with an increasing delay between each try.

const n = this.context.retryIndex;

if (n > 3) {

return false;

}

return n * 60;

}

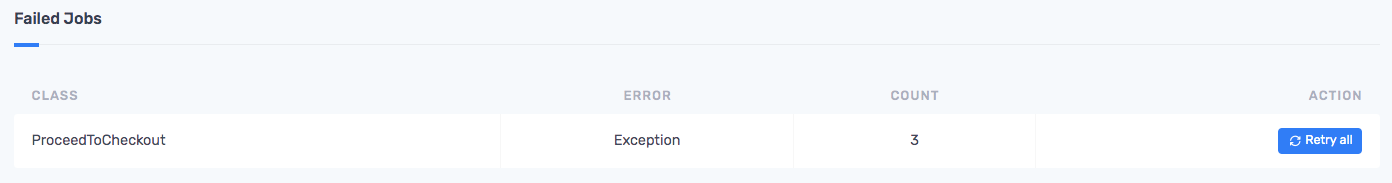

});If the task still fails after being automatically retried 3 times, we will receive an error alert and will be able to view the stacktrace and details on the dashboard, investigate further and manually retry when we are ready.
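The policy in onErrorRetryDelay (give up after three retries, otherwise wait n * 60 before retry n) is easy to reason about in isolation. The sketch below reproduces that schedule as a plain async helper, assuming the delay is in seconds. It is an illustration, not Zenaton's retry mechanism; `retryWithLinearBackoff` and its options are hypothetical, and `sleep` is injectable so the delays can be checked without actually waiting.

```javascript
// Hypothetical helper: call fn and, on failure, retry up to maxRetries times,
// waiting retryIndex * stepSeconds before retry number retryIndex (1, 2, 3...).
async function retryWithLinearBackoff(fn, { maxRetries = 3, stepSeconds = 60, sleep } = {}) {
  // Default sleep really waits; pass a fake one to simulate or test.
  const wait = sleep || ((seconds) => new Promise((resolve) => setTimeout(resolve, seconds * 1000)));
  for (let attempt = 0; ; attempt += 1) {
    try {
      return await fn();
    } catch (err) {
      const retryIndex = attempt + 1; // the retry we are about to make
      if (retryIndex > maxRetries) {
        throw err; // out of retries: surface the error so it can be alerted on
      }
      await wait(retryIndex * stepSeconds);
    }
  }
}

// Example: a flaky call that fails twice, then succeeds (delays skipped here).
let flakyCalls = 0;
retryWithLinearBackoff(
  async () => {
    flakyCalls += 1;
    if (flakyCalls < 3) throw new Error("API temporarily unavailable");
    return "report generated";
  },
  { sleep: async () => {} }
).then(console.log); // logs "report generated"
```

With the defaults, a call that never succeeds is attempted four times in total (the first try plus three retries) with waits of 60, 120, and 180 seconds before the error is rethrown.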

Scenario 2: Asynchronous jobs

[Note that a simple background job manager can also handle this.]

Another type of job we commonly use crons for is asynchronous tasks (also known as background jobs), which offload work from our main server and are not time-sensitive.

These might include:

- resizing uploaded images

- querying a database periodically to see if a task needs to be run

- sending notification emails or alerts when something happens.

Normally, you would add an entry to the crontab that periodically checks the database for pending work and then runs the script on your infrastructure.

Running asynchronous jobs with Zenaton

With Zenaton, we can instead dispatch a task at the moment it is needed, rather than saving information to the database for a cron job to find later.

For example, when a user uploads an image, instead of saving the information to a database, we add Zenaton code that dispatches the task, which is then executed on our worker by the Zenaton agent.
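The difference between polling and dispatching can be sketched in plain Node.js with the built-in `events` module. `dispatchTask` is a hypothetical stand-in for the Zenaton dispatch call and the `imageUploaded` event name is made up for the example; the point is only that the work is handed off when the upload happens, rather than discovered later by a scheduled query.

```javascript
const { EventEmitter } = require("events");

// Hypothetical stand-in for dispatching a Zenaton task: here we only record the call.
const dispatched = [];
function dispatchTask(name, ...input) {
  dispatched.push({ name, input });
}

// Push model: the upload handler dispatches the job as soon as the upload happens,
// instead of writing a row that a cron job polls for every few minutes.
const uploads = new EventEmitter();
uploads.on("imageUploaded", (imagePath) => {
  dispatchTask("MyAsyncJob", imagePath);
});

// Simulate a user upload. emit() calls listeners synchronously,
// so the job has already been dispatched on the next line.
uploads.emit("imageUploaded", "/uploads/cat.png");
console.log(dispatched.length); // 1
```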

Here is the code we would wrap around our current script, which we call MyAsyncJob.

```javascript
const { task } = require("zenaton");

module.exports = task("MyAsyncJob", {
  async handle(...input) {
    // your existing script
  },

  onErrorRetryDelay(e) {
    // Do not automatically retry some kinds of errors.
    // ImageLibError stands for the error class thrown by your image library.
    if (e instanceof ImageLibError) {
      return false;
    }
    // For other errors, retry at most 3 times, with an increasing delay between each try.
    const n = this.context.retryIndex;
    if (n > 3) {
      return false;
    }
    return n * 60;
  },
});
```

Then we dispatch the task with the following call:

```javascript
// client is an initialized Zenaton client; input holds the task's arguments.
client.run.task("MyAsyncJob", ...input);
```

A few of the benefits of using Zenaton here instead of running crons:

- we remove load from our database, since we no longer need to query it every 5 minutes

- we can distribute the load across our worker fleet: one worker for high-priority, low-latency tasks such as sending emails, and another for heavier, lower-priority tasks such as image resizing.

- alerting: we get an alert if the task fails, so that we can retry it or investigate.
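Splitting the load by priority boils down to routing each task name to its own queue, with separate workers consuming each queue. The sketch below shows only that idea in plain JavaScript; it does not show how Zenaton agents are configured, and `ROUTES`, `createRouter`, `SendNotificationEmail`, and the pool names are hypothetical.

```javascript
// Hypothetical routing table: which worker pool handles which task.
// Task and pool names are illustrative only.
const ROUTES = {
  SendNotificationEmail: "fast-pool", // high priority, low latency
  MyAsyncJob: "heavy-pool", // image resizing: heavier, lower priority
};

// Keeps one FIFO queue per pool; each pool's workers would consume only their own
// queue, so a burst of image resizes cannot delay notification emails.
function createRouter(routes, defaultPool = "heavy-pool") {
  const queues = new Map();
  return {
    dispatch(taskName, ...input) {
      const pool = routes[taskName] || defaultPool;
      if (!queues.has(pool)) queues.set(pool, []);
      queues.get(pool).push({ taskName, input });
      return pool;
    },
    queue(pool) {
      return queues.get(pool) || [];
    },
  };
}

const router = createRouter(ROUTES);
router.dispatch("SendNotificationEmail", "user@example.com");
router.dispatch("MyAsyncJob", "/uploads/cat.png");
console.log(router.queue("fast-pool").length, router.queue("heavy-pool").length); // 1 1
```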

How to troubleshoot:

For example, suppose an image resize task occasionally fails. By checking the stack trace on our dashboard, we might find that some image formats are not supported. Once we have fixed the code and deployed a new version, we can click retry on the failed task to resume the work.
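Separating errors that a retry can fix (timeouts, flaky connections) from errors that need a code change (such as an unsupported image format) is what keeps automatic retries useful. Here is a standalone sketch of that decision in the same shape as onErrorRetryDelay: `false` means "do not retry automatically", a number means "wait this long, then retry". `UnsupportedFormatError` and `retryDelayFor` are hypothetical names used only for illustration.

```javascript
// Hypothetical error class for a deterministic failure: retrying the same input
// will fail the same way until the code is fixed and redeployed.
class UnsupportedFormatError extends Error {
  constructor(format) {
    super(`Unsupported image format: ${format}`);
    this.name = "UnsupportedFormatError";
  }
}

// false = do not retry automatically; a number = delay before the next retry.
function retryDelayFor(error, retryIndex) {
  if (error instanceof UnsupportedFormatError) {
    return false; // needs a code fix, then a manual retry from the dashboard
  }
  if (retryIndex > 3) {
    return false; // give up after 3 automatic retries and alert
  }
  return retryIndex * 60; // transient error: wait longer on each retry
}

console.log(retryDelayFor(new UnsupportedFormatError("heic"), 1)); // false
console.log(retryDelayFor(new Error("connection reset"), 2)); // 120
```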

Scenario 3: Multi-step workflow

Some of the cron scripts we run are part of multi-step processes, where some steps run or not depending on the outcome of previous steps or on certain conditions.

How to implement scheduling, logic and conditions:

Normally, you would add an entry in the crontab with the schedule and a path to a script. Then it would run on your infrastructure.

With Zenaton, we can instead run this sequence of steps as a workflow.

A workflow describes a sequence of steps or tasks. The steps do not have to run one after another; they can also be triggered by events.

In order to write our sequence of steps, we will:

- write a task for each step

- write a workflow to orchestrate those steps

The example we will use here is an ETL job. It could all be written as a single task, but you will see why it is better to build it as a workflow:

- pull data from many sources

- crunch and aggregate some data

- generate a report

- notify people

- ask for human validation

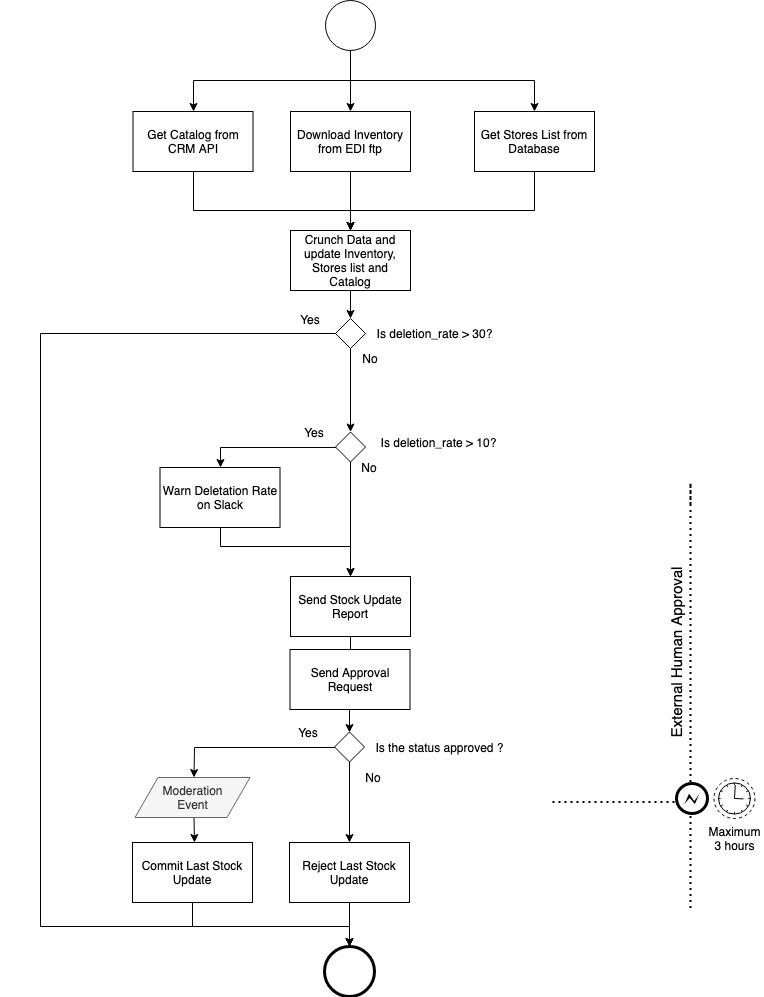

- We fetch data from many sources: a product catalog from a CRM, an inventory update as a CSV file from an FTP server, and finally a list of stores from our database.

- Then, for each store, we process and aggregate the data and send a report with metrics about the updates.

- We can send an alert or even stop the whole process if there are too many missing products.

- Finally, we need approval from someone in the organization; we wait for it and receive it as an external event.
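The "too many missing products" check is plain arithmetic. In the workflow below, each store's deletions are expressed as a percentage of the catalog and compared with two thresholds: above 30% the update stops, above 10% the team is warned. As a standalone sketch (`deletionDecision` and the action names are hypothetical):

```javascript
// Thresholds taken from the UpdateStocks workflow in this article.
const STOP_ABOVE_PERCENT = 30;
const WARN_ABOVE_PERCENT = 10;

// Hypothetical helper: percentage of catalog products deleted for one store,
// and what the workflow would do about it.
function deletionDecision(nbDeletion, catalogSize) {
  if (!(catalogSize > 0)) {
    throw new RangeError("catalogSize must be a positive number");
  }
  // Multiply before dividing so round numbers stay exact (30 * 100 / 100 === 30).
  const deletionRate = (nbDeletion * 100) / catalogSize;
  if (deletionRate > STOP_ABOVE_PERCENT) return { deletionRate, action: "stop" };
  if (deletionRate > WARN_ABOVE_PERCENT) return { deletionRate, action: "warn" };
  return { deletionRate, action: "report" };
}

// 45 deletions out of 120 products is 37.5%, above the 30% threshold.
console.log(deletionDecision(45, 120)); // { deletionRate: 37.5, action: 'stop' }
```

The comparisons are strict, so exactly 30% only triggers a warning and exactly 10% only produces the normal report.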

```javascript
const { workflow, duration } = require("zenaton");

module.exports = workflow("UpdateStocks", function* () {
  // (1) Fetch all the data we need in parallel
  const [catalog, inventory, stores] = yield this.run.tasks(
    ["GetCatalogFromCRMapi"],
    ["DownloadInventoryFromEDIftp"],
    ["GetStoresListFromDatabase"]
  );

  // (2) For each store...
  for (const store of stores) {
    // (3) ...crunch the data (synchronously, because we need the result below)
    const updates = yield this.run.task("UpdateStoreInventory", store, catalog, inventory);
    const deletion_rate = (updates.nb_deletion / catalog.length) * 100;

    // (4) Throwing triggers our alerting system, so we are notified instantly.
    if (deletion_rate > 30) {
      throw new Error("Too many deletions, stock update stopped.");
    }

    // (5) If more than 10% of products were deleted, asynchronously warn the team via Slack.
    if (deletion_rate > 10) {
      this.run.task("WarnDeletionRateOnSlack", store, deletion_rate);
    }

    // (6) Send the update report
    this.run.task("SendStockUpdateReport", store, updates);
  }

  // (7) Ask a manager to review and approve or reject this update.
  this.run.task("SendApprovalRequest");

  // (8) Wait up to 3 hours for external human approval
  const [moderation, status] = yield this.wait.event("ModerationEvent").for(duration.hours(3));
  if (moderation && status === "approved") {
    yield this.run.task("CommitLastStockUpdate");
  } else {
    yield this.run.task("RejectLastStockUpdate");
  }
});
```

Flowchart of the multi-step workflow:

This flowchart helps to visualize the tasks and logic of the workflow.

Benefits:

All of the logic is in one place

Since we are using our own programming language, we can use loops, try/catch, or whatever code structure makes sense, and we can use any libraries we need.

The Zenaton SDK also gives us the flexibility to use:

- parallel execution

- synchronous or asynchronous execution

- waiting for specific events (instead of repeatedly checking whether something has changed)
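These execution styles map onto familiar JavaScript patterns. The sketch below is a plain Node.js analogue, not Zenaton code: `Promise.all` plays the role of parallel execution, `await` the role of a synchronous step (`yield` in the workflow), and starting a promise without awaiting it the role of an asynchronous step. `fakeFetch` and its delays are hypothetical.

```javascript
// fakeFetch stands in for a task: it logs when it starts and ends.
async function fakeFetch(log, source, ms) {
  log.push(`start ${source}`);
  await new Promise((resolve) => setTimeout(resolve, ms));
  log.push(`end ${source}`);
  return `${source} data`;
}

async function main() {
  const log = [];

  // Parallel: all three fetches start before any of them finishes.
  const [catalog, inventory, stores] = await Promise.all([
    fakeFetch(log, "catalog", 30),
    fakeFetch(log, "inventory", 10),
    fakeFetch(log, "stores", 20),
  ]);

  // Synchronous step: we await it because we need its result.
  const updates = await fakeFetch(log, "updates", 5);

  // Asynchronous step: started but not awaited, so we carry on immediately.
  const notification = fakeFetch(log, "notification", 5);
  log.push("carried on without waiting");

  // Only so this demo's log is complete before we return it.
  await notification;
  return { data: [catalog, inventory, stores, updates], log };
}

main().then(({ log }) => console.log(log.join("\n")));
```

In the log, the three "start" lines come first, and "carried on without waiting" appears before "end notification".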

Code overview:

(1) Here we take advantage of the parallel syntax to fetch data from different sources in parallel.

(2) We can use loops and any other code structure.

(3) We spin up a new task synchronously using the yield keyword. We choose synchronous execution because we need its result later on.

(4) We can use whatever logic we want; here we throw an exception to trigger the alerting feature so that we are notified instantly.

(5) We can spin up an asynchronous task by omitting yield. We chose asynchronous execution this time because we don't need to wait for its output or for it to finish; we can continue doing other things in the meantime.

(6) We spin up another asynchronous task to send a report to each store manager.

(7) We spin up an asynchronous task to ask the director of all stores to approve or reject the new updates.

(8) We wait at most 3 hours for the ModerationEvent. If we receive this event and it is approved, we commit the update; in every other case, we reject it.
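Waiting for an event with a deadline, as in step (8), can be sketched in plain Node.js with `EventEmitter` and `setTimeout`. This only illustrates the semantics and is not Zenaton's implementation; `waitForEvent`, `decideOnUpdate`, and the `{ status }` payload shape are hypothetical. The outcome matches the workflow: commit only on an explicit approval, reject on a refusal or a timeout.

```javascript
const { EventEmitter } = require("events");

// Resolve with the event payload, or with null if nothing arrives within timeoutMs.
function waitForEvent(emitter, eventName, timeoutMs) {
  return new Promise((resolve) => {
    const onEvent = (payload) => {
      clearTimeout(timer); // stop the timeout once the event has arrived
      resolve(payload);
    };
    const timer = setTimeout(() => {
      emitter.removeListener(eventName, onEvent); // stop listening after the deadline
      resolve(null);
    }, timeoutMs);
    emitter.once(eventName, onEvent);
  });
}

// Commit only on an explicit approval; a rejection or a timeout both reject.
async function decideOnUpdate(approvals, timeoutMs) {
  const moderation = await waitForEvent(approvals, "ModerationEvent", timeoutMs);
  return moderation && moderation.status === "approved" ? "commit" : "reject";
}

const THREE_HOURS_MS = 3 * 60 * 60 * 1000;
const approvals = new EventEmitter();
decideOnUpdate(approvals, THREE_HOURS_MS).then((outcome) => console.log(outcome)); // logs "commit"
approvals.emit("ModerationEvent", { status: "approved" });
```

One design difference is worth noting: an in-process timer like this is lost if the process restarts during the 3-hour wait, which is a good reason to hand long waits to a workflow engine rather than to a single running script.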